Large Language Models (LLMs) are amazing at completing complex tasks: they can automate browsers, write code, and more. But anyone who has worked with them knows that not all models are equal. The smaller, cheaper ones often fail miserably on tasks that bigger ones solve with ease. I’ve seen it happen many times.

That’s where my idea of Recipe Generation comes in. A recipe is nothing more than a distilled set of instructions the model can follow to repeat a task it already solved successfully. Think of it as procedural memory for AI: instead of starting from scratch every time, the agent learns a reusable algorithm.

The Analogy: My Parents and Computers

This idea actually came from my own family. Years ago, when I was teaching my parents how to use computers, they would keep a little notebook at hand. Every time I explained how to do something (like saving a photo from WhatsApp Web), they would carefully write down the steps:

- Open WhatsApp Web.

- Find the photo.

- Click it to expand.

- Press the download button.

This became their personal algorithm library. Whenever they had to do the task again, they didn’t rely on memory or experimentation: they just followed the recipe. That’s exactly what I want my AI agents to do.

How Recipe Generation Works

When a larger model succeeds at a task, I ask it to produce a recipe: a short, Markdown-formatted guide with the essential steps, tools, and placeholders. No detours, no failed attempts: just the direct path to success. If the model made a mistake earlier that should be avoided, the recipe includes a warning.
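To make the shape of a recipe concrete, here is a minimal sketch of one rendered to Markdown. The `Recipe` class, its field names, and the example steps and warning are all assumptions made up for illustration; they are not the actual format or content produced in the experiment.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Recipe:
    """Hypothetical container for one distilled recipe."""

    task: str
    steps: list[str]
    tools: list[str] = field(default_factory=list)
    warnings: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        """Render the recipe as a short Markdown guide."""
        lines = [f"# Recipe: {self.task}", ""]
        if self.tools:
            lines += ["## Tools", *[f"- {tool}" for tool in self.tools], ""]
        numbered = [f"{i}. {step}" for i, step in enumerate(self.steps, 1)]
        lines += ["## Steps", *numbered, ""]
        if self.warnings:
            lines += ["## Warnings", *[f"- {w}" for w in self.warnings], ""]
        return "\n".join(lines).rstrip() + "\n"


# Illustrative content only: placeholders in braces stand in for task-specific values.
recipe = Recipe(
    task="Create a calendar event",
    tools=["browser"],
    steps=[
        "Open the calendar and start a new event.",
        "Set the title to {event_title}.",
        "Set the start to {start_time} and the end to {end_time}.",
        "Save the event.",
    ],
    warnings=["Confirm the event appears in the calendar before finishing."],
)
print(recipe.to_markdown())
```

Keeping placeholders such as `{event_title}` in the steps is what lets the same recipe be reused for a different event.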

These recipes are then stored locally in long-term memory (either a RAG index or the filesystem). The next time I (or the model) attempt a similar task, the system can retrieve the matching recipe and inject it directly into the model’s context. The result: more consistency, less wasted computation, and higher success rates.
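The filesystem variant of this store-and-retrieve loop could look roughly like the sketch below. Everything here is an assumption for illustration: the function names (`save_recipe`, `find_recipe`, `build_system_prompt`), the one-file-per-recipe layout, and the naive word-overlap matching, which stands in for whatever retrieval (for example, embeddings in a RAG setup) a real system would use.

```python
from __future__ import annotations

import re
import tempfile
from pathlib import Path
from typing import Optional


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def save_recipe(store: Path, name: str, markdown: str) -> Path:
    """Write one recipe as a Markdown file inside the store directory."""
    store.mkdir(parents=True, exist_ok=True)
    path = store / f"{name}.md"
    path.write_text(markdown, encoding="utf-8")
    return path


def find_recipe(store: Path, task: str, min_overlap: int = 2) -> Optional[str]:
    """Return the recipe sharing the most words with the task, if any."""
    task_words = tokenize(task)
    best_score, best_text = 0, None
    for path in sorted(store.glob("*.md")):
        text = path.read_text(encoding="utf-8")
        score = len(task_words & tokenize(text))
        if score > best_score:
            best_score, best_text = score, text
    return best_text if best_score >= min_overlap else None


def build_system_prompt(base_prompt: str, recipe: Optional[str]) -> str:
    """Inject the retrieved recipe into the context, when one was found."""
    if recipe is None:
        return base_prompt
    return f"{base_prompt}\n\nA recipe from a previous successful run:\n\n{recipe}"


# Demo with a temporary directory and two made-up recipes.
store = Path(tempfile.mkdtemp()) / "recipes"
save_recipe(store, "calendar-event", "# Recipe: Create a calendar event\n1. Open the calendar.\n")
save_recipe(store, "save-photo", "# Recipe: Save a photo from a chat\n1. Open the chat.\n")

match = find_recipe(store, "Create a new event on my calendar")
prompt = build_system_prompt("You are a browser agent.", match)
print(prompt)
```

The `min_overlap` threshold is there so that an unrelated task gets no recipe at all rather than a misleading one.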

My Experiment

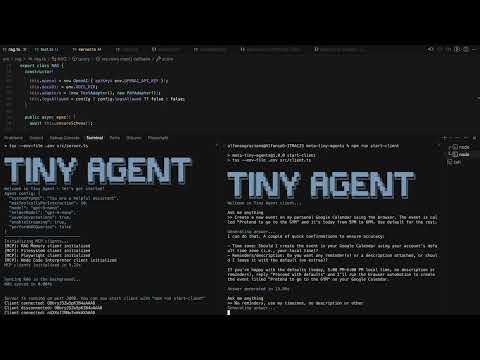

Here’s a real example. I asked two models to create a new event on my Google Calendar with a specific title, start time and end time.

- GPT-5-nano: failed repeatedly. No matter how many times I tried, it couldn’t get it right.

- GPT-5-mini: solved it on the first try. Bigger model, better reasoning.

Then I asked GPT-5-mini to generate a recipe for the task. After saving it, I retried with GPT-5-nano, this time giving it the recipe in its context.

The result? GPT-5-nano nailed it on the first attempt.

This simple test showed me that recipes can let smaller models complete tasks that were previously out of reach. As a side effect, even bigger models benefit from recipes: they already have a proven high-level plan, so they don’t waste time on trial and error.

Note: the video has been sped up. Even so, it’s still very slow with weak models; I’ve found gemini-2.0-flash to be much faster.

The Recipe Generation Prompt

The Markdown recipe is generated by a dedicated prompt. Its input is the full conversation (including tool usage), and the model distills from it the working steps that were performed to achieve the task.
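The original prompt is not reproduced here, so the following is only a sketch of how such a request might be assembled. The instruction wording, the message schema (`role`, `content`, `tool_calls`), the `click` tool, and the function names are all hypothetical, and no real model API is called.

```python
from __future__ import annotations

import json

# Illustrative wording only; this is not the prompt used in the experiment.
RECIPE_INSTRUCTIONS = """\
You are given the full transcript of a task that was completed successfully.
Write a short Markdown recipe that lets another agent repeat the task.
- Keep only the steps that led to success, in order.
- Name the tools that were used.
- Replace task-specific values with placeholders such as {title}.
- Add a warning for any mistake that should be avoided next time.
"""


def format_transcript(conversation: list[dict]) -> str:
    """Flatten messages and tool calls into one plain-text transcript."""
    lines = []
    for message in conversation:
        role = message["role"]
        if message.get("content"):
            lines.append(f"[{role}] {message['content']}")
        for call in message.get("tool_calls", []):
            args = json.dumps(call["arguments"], sort_keys=True)
            lines.append(f"[{role} -> {call['name']}] {args}")
    return "\n".join(lines)


def build_recipe_request(conversation: list[dict]) -> list[dict]:
    """Build the messages that ask a model to distill a recipe."""
    return [
        {"role": "system", "content": RECIPE_INSTRUCTIONS},
        {"role": "user", "content": format_transcript(conversation)},
    ]


# A made-up conversation with one tool call and its result.
conversation = [
    {"role": "user", "content": "Create an event called Standup from 9:00 to 9:15."},
    {
        "role": "assistant",
        "content": "",
        "tool_calls": [{"name": "click", "arguments": {"target": "Create"}}],
    },
    {"role": "tool", "content": "Event form opened."},
    {"role": "assistant", "content": "The event has been created."},
]

messages = build_recipe_request(conversation)
print(messages[1]["content"])
```

The resulting `messages` list would then be sent to the larger model, and its Markdown reply saved as the recipe.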

Why This Matters

- Bridging the gap: Smaller models can now handle tasks that normally require larger ones.

- Efficiency: Bigger models also improve because they skip redundant exploration.

- Memory: Recipes act as a persistent knowledge base for tasks the agent has already mastered.

- Transparency: Recipes are human-readable, so I can inspect or tweak them.

Challenges

Of course, this isn’t without issues:

- Recipes must stay general enough to be reused but specific enough to work (getting this balance right took some tuning of the prompt that generates the recipe).

- A bad recipe can encode mistakes.

- Interfaces and APIs change, which means recipes may need maintenance.

This is still a very early-stage experiment. I’ve only tested it on a small set of use cases, and while the results look promising, it clearly needs more systematic testing and evaluation before I can draw strong conclusions.

Conclusion

I think of Recipe Generation as teaching my AI agents the same way I once taught my parents to use a computer. Instead of reinventing the wheel each time, the agent writes down the steps and reuses them. My experiment with Google Calendar showed that a weaker model can succeed where it previously failed when given a good recipe, and that stronger models can become more efficient too.